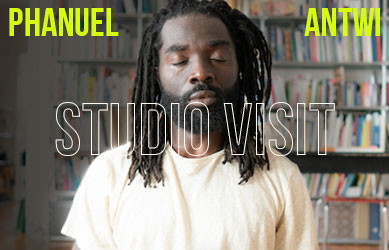

Interview by William Kherbek // June 12, 2019

Algorithms are, increasingly, turning up in the strangest and most dangerous places. From the micro-targeting of advertising, to the production of news stories, to the granting of parole to the incarcerated, algorithms are being integrated into areas of life that were previously considered the province of human decision-making. Dystopian scenarios are easy enough to construe; indeed, the title of a Jason Tashea article for Wired magazine offers a sense of the techno-nightmare that has become all too real for too many people: “Courts Are Using AI To Sentence Criminals. This Must Stop Now”. Tashea, along with journalists like Natasha Leonard and Sam Biddle, and researchers including Bev Skeggs and Safiya Umoja Noble, have sounded warning bells about the dangers of integrating largely opaque technologies into daily life. Artists, too, have begun to question the ways in which algorithms have “learned” about the world and are feeding poisoned harvest back to the human beings who created them.

The Dutch artist Harm van den Dorpel has long put technology at the centre of his practice. His recent works ‘Deli Near Info’—which organises popular images algorithmically—and ‘Death Imitates Language’—which creates “generations” of artworks descended from “parent” images via algorithmic recombination—both use the algorithm as a starting point for a larger discussion about the way machines learn and how we learn about ourselves via machines. As writers like Noble pose the question of how biases are inscribed and entrenched via the algorithm, the question of how machines learned, but also how machines (and their human progenitors) can unlearn toxic behaviours in an algorithmic age is an urgent question. We spoke to van den Dorpel about the vagaries of machine learning in visual art and the possibility of teaching algorithms to avoid learning the worst habits of human beings.

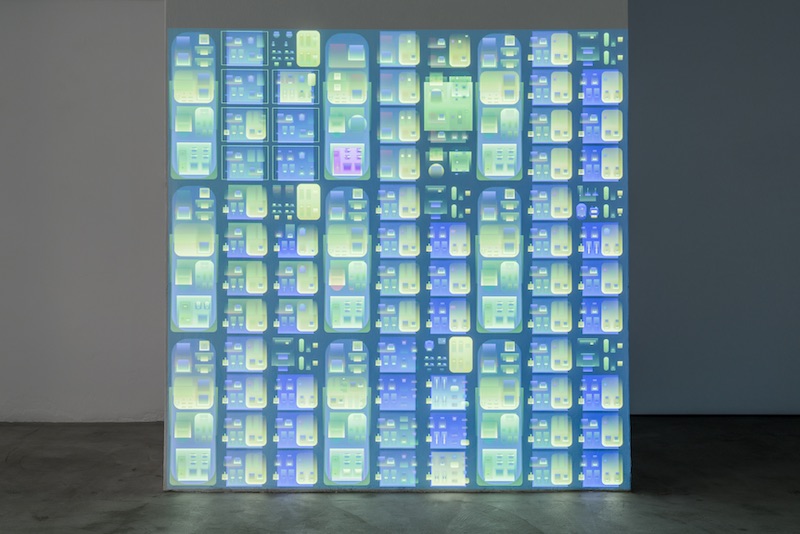

Harm van den Dorpel: ‘Structures of Redundancy’, 2019 // Photo by Dinis Santos, courtesy the Artist and Lehmann Silva Gallery Porto

Will Kherbek: Could you describe what first interested you in the algorithm as a potential aesthetic object?

Harm van Den Dorpel: It allows me to take advantage of the specific characteristics of algorithms, which are relentless repetition, allowing for a complexity that a human could not reach (I think). Also, there’s a certain systematic approach you can take, you can have optimisation improvements, all from a desire to reach new images that I might not have been able to reach if I had taken a more classical [artistic] strategy.

WK: Regarding the cultural implications of these forms of complexity, could you speak about how you see the algorithm playing a role in the cultural discussion more generally beyond your own work? Are there specific implications to an emergent algorithmically-driven cultural discussion?

HVDD: Computer art or algorithmic art—it’s often called generative art—is very old; as soon as computers were invented people tried to make images with them. Those works were very much based on random inputs: you get something random, like a new, surprising image because you give it [the programme] certain parameters, which is fun for a while, but gets boring because randomness has very little meaning, and there’s not much development over time. It’s always different, but it’s always the same.

I think more recent developments in computer science have allowed for more intelligence there. Of course, we know that neural networks are powerful, and the more recent generative art has had more of a direction instead of “always different, always the same”. Over time, you can develop something more surprising. In my work, I look at different algorithms from different times to see what the implications of those [algorithms] are visually, but, also, it’s kind of an archaeological interest that I have in the redundancy or the obsolescence of algorithms. I guess maybe my approach has an educational dimension, to show that algorithms are not exactly black boxes, but are interesting to look at from an aesthetic point of view and that they can be better understood if you visualise them.

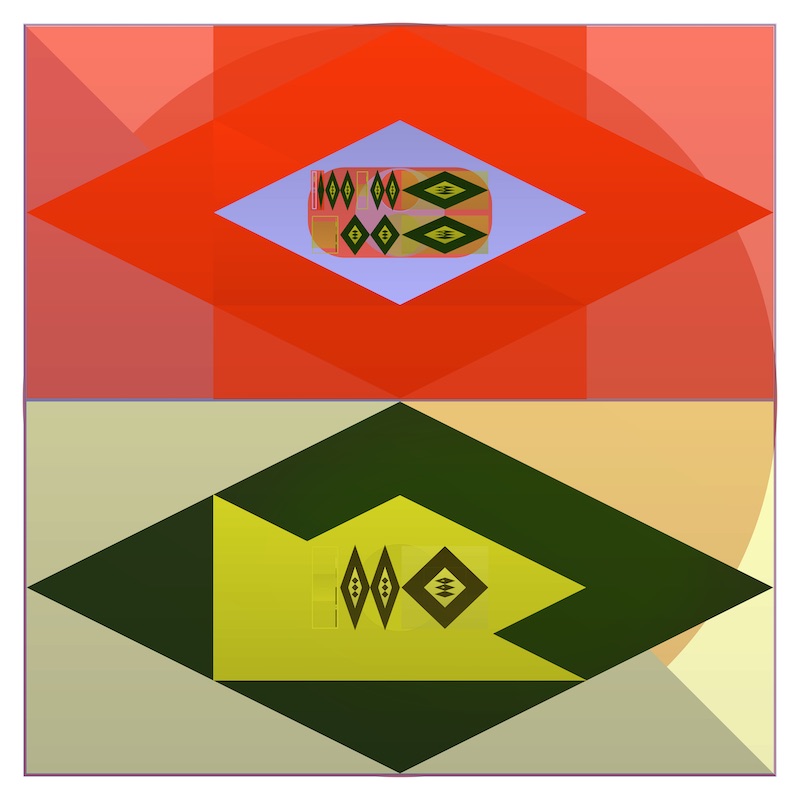

Harm van den Dorpel: ‘Symbol no aggression’, 2019 // Courtesy the Artist, Lehmann Silva Gallery Porto and Upstream Gallery Amsterdam

WK: In that our animating topic is “unlearning”, I wanted to ask a bit about the algorithmic “learning”. Would you characterise what’s happening in projects like ‘Deli Near Info’ and ‘Death Imitates Language’ as “learning”?

HVDD: It’s a good question. The AI that I use is very simple. If you look at evolutionary programming or genetic algorithms, you produce a population and you look at the best performing specimen in that population. You can take those, mutate them and perform crossovers to find new variations and look to see if any of those results are an improvement in fitness or interestingness or surprisingness, and over time you reach an optimum. While I do this [process], I often change the algorithm and I change the way that the specimens are displayed or materialised. So I’m learning while I do this, and I’m improving the algorithm.

WK: Having designed and created these generative algorithm-based works, what has the process taught you about the ways setting parameters matter to an algorithmic learning procedure?

HVDD: I think the data I give my algorithms—and, in a scientific situation, the data that you interpret and process—is extremely subjective. I once made a programme that had the possibility of generating images based on feedback. I’d programmed it, but I thought it was a bit too slow, so I thought I’d do it again in a different language to see what that would change. The code, in my mind, was the same, but the images it generated were so different because there are so many ways to programme something. You might not even be aware that a practical change in coding would have a meaningful repercussion in the output, but the signatures of the programmer can always be found in the algorithm; there’s no such thing as objectivity.

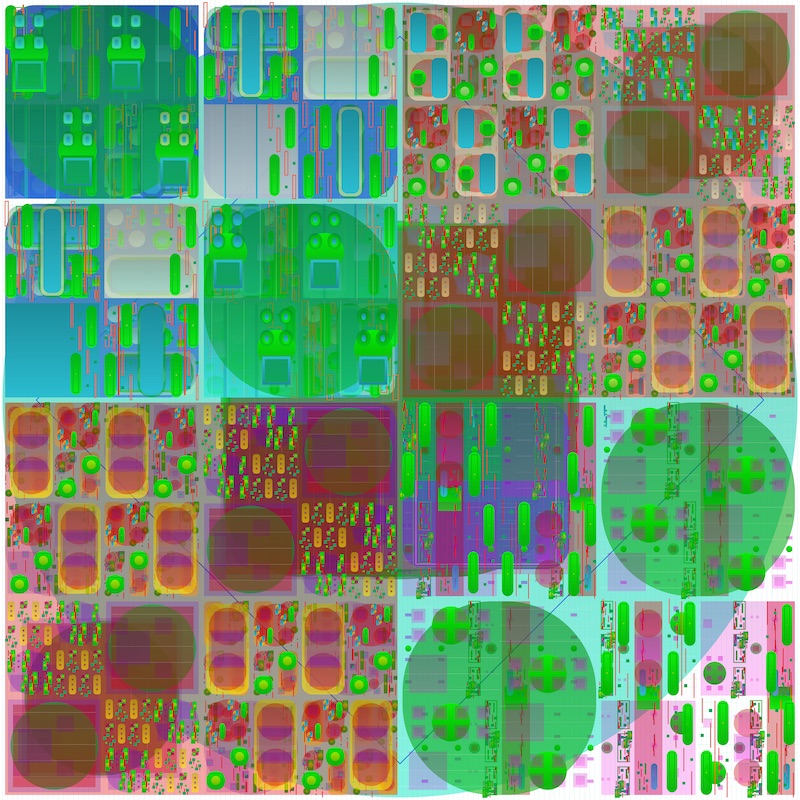

Harm van den Dorpel: ‘Decompress / Embrace Redundancy’, 2019 // Courtesy the Artist and Upstream Gallery Amsterdam

WK: In that algorithms are increasingly present in daily life but not very well understood, could you speak about the ways you consider the power hierarchies inscribed in society as an aspect of algorithmically-driven cultural and political dynamics?

HVDD: I think in every data set that comes from big data, even data sets created by users from divergent demographic backgrounds, you will always produce the lowest common denominator. Algorithms eliminate the weirdness and the exceptions, and I think, by default, that’s how neural networks work: they look for the thing that makes the most “sense,” and that is, in a way, very destructive and very boring in an aesthetic process. That’s why I use my own training data, because, to make a network sufficiently advanced, you need a lot of data to train it. If you take a data set that’s preexisting, you will get the common denominator which will be the thing that most people will like a little bit but never the thing that a few people will like a lot.

Harm van den Dorpel: Installation view ‘Uninnocent Bystander’, 2019 // Photo by Dinis Santos, courtesy the Artist and Lehmann Silva Gallery Porto

WK: As someone with a programming background, could you talk about some the dangers you see of algorithms being so integrated in daily life?

HVDD: I think there’s a big misconception in calling it “artificial intelligence” because there really is no intelligence there. Intelligence for human beings means having a complex decision-making process based on multiple parameters, applied on a case by case basis which really requires creativity and the ability to combine often seemingly unrelated factors. Neural networks are not like this at all. They are very simple functions with multiple layers, and they will very much behave similarly in different situations, assuming a certain single truth that is applicable based on its dogmatic learning of a system. Any kind of association, be it race or gender—in terms of reinforcing existing biases—will systematically and predictably make a connection once, and it will go on to do it forever. It disregards anything it doesn’t know about. I think in calling this procedure “intelligence”, people think there’s complex decision-making happening, but it is not that at all.

WK: Lastly, in terms of the question of unlearning, do you see ways in which algorithms might be useful in terms of information organising and distribution that might help humans unlearn their biases? We’ve talked a lot about how humans can write biases into algorithms, but can that process be reverse engineered?

HVDD: It depends on who’s in charge of the algorithms, whether it’s a government or a company. In a way, companies will be more honest because they have an interest in optimising profit, so they will make sure that if they have a bias that would jeopardise sales, they will try to unlearn that bias. If you talk about surveillance on the street, which might be connected to the prison industrial complex, I’m a little bit scared about this topic, because there’s a lot of money involved. These companies would benefit from bias, particularly a preexisting bias – for example, a racialised bias in society. So I think it’s in part about the idea of the Commons, and of who owns what.

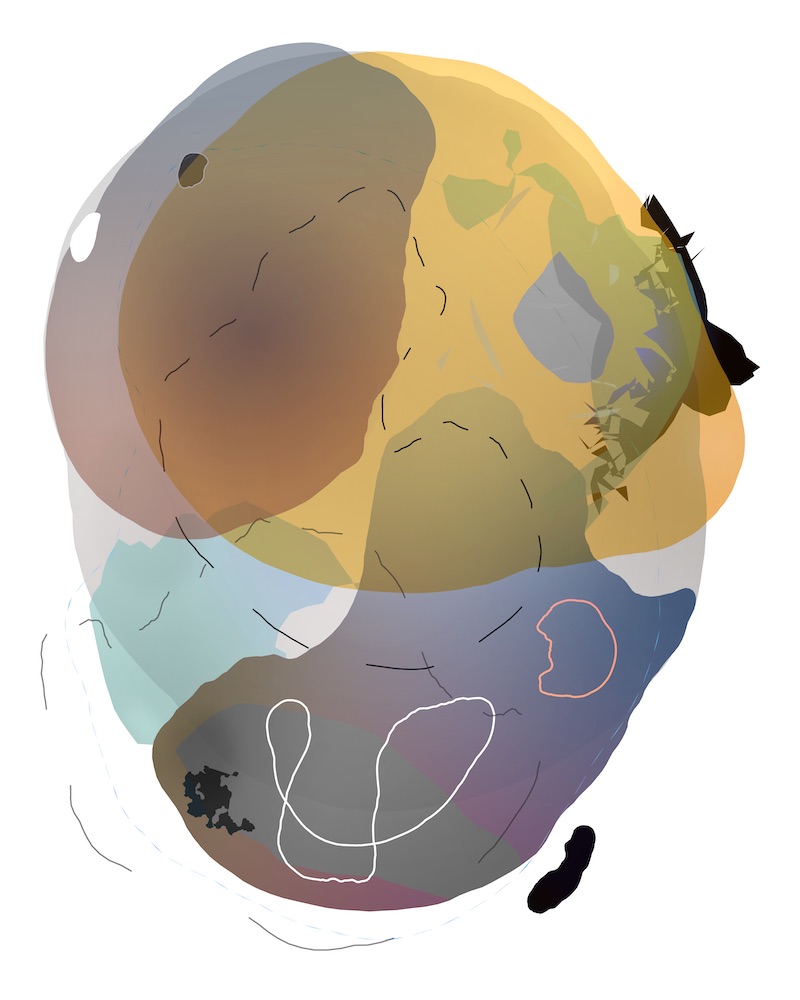

Harm van den Dorpel: ‘Unheard Estimator’, 2019 // Courtesy the Artist and Upstream Gallery Amsterdam

This article is part of our monthly topic of ‘Unlearning.’ To read more from this topic, click here.