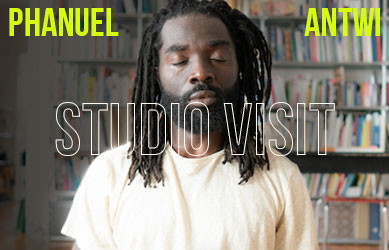

Interview by Lucia Longhi // Feb. 11, 2020

An increasing number of artists are reflecting on the role that technology and AI are playing in our lives. Elisa Giardina Papa’s research casts a light on this crucial topic as it relates to our emotional sphere, revealing the paradoxes inherent in devices and apps programmed to care for our mental and emotional health, and for self-optimization in general. Her work considers the symbiotic relationship between humans and technology, often dwelling on liminal points: moments where a human activity loses its original function and, precisely because of technology, turns into its opposite. Sleep, for example, becomes a moment of productivity, a source of data to be collected to optimize human functions. Emotions become an arid visual map that serves apps designed to provide emotional support.

Her recent video and installation works focus on new ways of giving and receiving different types of care through the internet, while exploring how these tools end up overturning the very premise that gave rise to them, many of them being tested on precarious workers under questionable conditions.

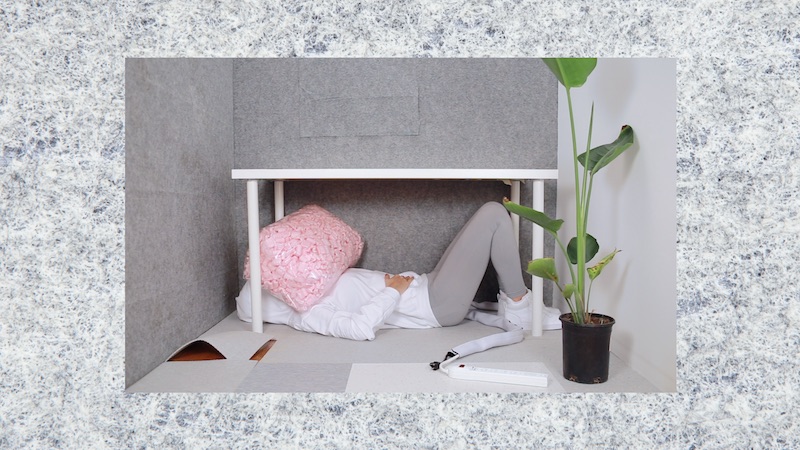

Elisa Giardina Papa: ‘Labor of Sleep, Have you been able to change your habits??’, 2017, video still // Courtesy of the artist

Lucia Longhi: Before talking about your recent works, I would like to ask you: how did you first become interested in technology, and why did you decide to focus on this particular aspect (i.e. the emotional realm and the sphere of feelings)?

Elisa Giardina Papa: I am interested in our relationship to instrumentality at large, be it in relation to digital tools or mechanical and analog tools: a phone, a hammer, a pencil. What interests me most is the relentless tension between subjection and liberation that we experience each time we hold an instrument in our hands—the way in which we are constantly trapped between the dream of being master of that tool and the fear of becoming its servant. With algorithmic and AI-enhanced digital technologies, the question of agency is even more complicated. Am I looking at my phone, or is it looking at me? If I shoot one more selfie with it, will it shoot back at me?

LL: The trilogy ‘Technologies of Care’ (2016), ‘Labor of Sleep, Have you been able to change your habits??’ (2017) and, most recently, ‘Cleaning Emotional Data’ (2020) explores how labour and care are reframed by digital economies and automation. Can you give us an overview of these works and some insight into the research at their core?

EGP: With the video installation ‘Technologies of Care,’ I tried to document the ways in which service and affective labor are being outsourced and automated via internet platforms. The video is based on conversations I had with online caregivers who, through a variety of websites and apps, provide clients with customized goods and experiences, erotic stimulation, companionship and emotional support. ‘Labor of Sleep, Have you been able to change your habits??’ (2017) focuses on the status of sleep within the tempo of present-day capitalism and engages the rhetoric of self-improvement apps. ‘Cleaning Emotional Data’ (2020) addresses the underpaid human labor involved in categorizing the massive quantities of visual data used to train emotion-recognition AI.

LL: You examine the relationship between digital care and the labour behind it. I’m thinking now about your romantic relationship with “Worker 7” (part of ‘Technologies of Care’), who you were unsure was a bot or a real person chatting with you as part of a job. Can you share your thoughts about this experience?

EGP: I didn’t know it at the time, but the app that I subscribed to initially began as merely a chat-bot service; only later, when the founders determined that it wasn’t convincing enough, did they switch to using gig workers. So when I was exchanging texts with my “invisible boyfriend,” I was actually connecting with approximately 600 writers (microtask-workers) who would interchangeably partner with my account to enable the fantasy of this tireless companion and love-giver. The majority of these workers are women, regardless of whether or not the client built the profile of the virtual boyfriend to be a male, and as part of their contract they are not allowed to talk about their work as financially remunerated labor. It is, therefore, gendered and effaced care work that demands that the worker participate in effacing herself as a subject.

LL: Your work often addresses the gendered division of labor. Can you tell us about your experience of this while researching for your art practice? In your opinion, what impact are the internet and technology-related jobs having on the gender gap?

EGP: With ‘Technologies of Care,’ I tried to trace how pre-existing inequalities in care work (the feminization of caregiving paired with its lack of recognition as waged work, and the historical division of labor between Global North and Global South) have been both exacerbated and obscured by digital economies. The ways in which these new forms of labor are entrenched in patriarchy and racial capitalism are clearly diagnosed in Neda Atanasoski and Kalindi Vora’s ‘Surrogate Humanity: Race, Robots, and the Politics of Technological Futures.’

LL: The new video installation ‘Cleaning Emotional Data,’ recently on view in the exhibition ‘Hyperemployment’ at Aksioma – Institute for Contemporary Art, Ljubljana, focuses on the new forms of precarious labour emerging within artificial intelligence economies: massive quantities of visual data need to be collected and processed (by human workers) to train emotion recognition algorithms. You decided to experience this phenomenon firsthand and became one of those microworkers who “clean” data to train machine vision algorithms. What were your tasks in this job, and how did you feel while doing it?

EGP: Yes, in the winter of 2019, while living in Palermo and researching affective computing systems, I ended up working remotely for several North American “human-in-the-loop” companies. Among the tasks I performed were the taxonomisation of human emotions, the annotation of facial expressions and the recording of my own image to create datasets for AI systems that supposedly interpret and simulate human affects. While I was doing this work, some of the videos in which I recorded my emotional expressions were rejected. It seems that my facial expressions did not fully match the “standardised” affective categories provided to me. I was never able to learn whether this rejection originated from an algorithm or, for example, from another gig worker who, perhaps due to cultural differences, interpreted my facial expressions in a different way.

Elisa Giardina Papa: ‘Cleaning Emotional Data,’ 2020, installation view, Aksioma Institute for Contemporary Art, Ljubljana, Slovenia, textile pieces developed in collaboration with Michael Graham of Savant Studios // Photo by Jaka Babnik/Aksioma

LL: Technologies, in particular the ones you deal with in your latest works (sleep apps, dating apps, self-care technologies), have been designed to serve human well-being, as instruments for our care. Now, paradoxically, the type of work needed to improve them may lead to the very illnesses and disorders those apps try to cure. A short circuit is created. Is this also what the work is about?

EGP: Yes, these apps are a new “pharmakon,” the Greek term signifying both a poison and its remedy, in the sense that they operate through an inherent duplicity whereby they provide a remedy for what they themselves take away. Constant connectivity and increasing habituation to 24/7 techno-capitalism dispossess humans of “natural” sleep cycles and open up new scenarios for sleep disorders, but these apps simultaneously present us with remedies to re-optimize whatever hours of sleep we have left. That is: we sleep less, but we might learn how to sleep faster.

LL: While trying to detect, understand and simulate human affects and emotions, these programs also flatten and standardise something that has always been anything but standardisable: an inner, distinctive characteristic of human beings, and also of different cultures. Does the word “cleaning” in the title also suggest something wrong that needs to be removed?

EGP: A number of AI systems, which supposedly recognize and simulate human affects, base their algorithms on flawed understandings of emotions as universal, authentic and transparent. Increasingly, tech companies and government agencies are leveraging this prescribed transparency to develop software that identifies, on the one hand, consumers’ moods and, on the other hand, potentially dangerous citizens who might pose a threat to the state. The problem, of course, is that hegemonic demands for legibility and transparency are never devoid of political implications. And, more specifically, a requirement for emotional legibility can recall a gender- and race-based history in which, as Sara Ahmed has shown, the ability to be in control of emotions and to “appropriately” display them has come to be seen as a characteristic of some bodies and not others.

This article is part of our monthly topic of ‘Wellness.’

Elisa Giardina Papa: ‘Technologies of Care,’ 2016, installation view, XVI Quadriennale D’Arte, Palazzo delle Esposizioni, 2017 // Courtesy of the artist