by Lorna McDowell // Oct. 31, 2023

This article is part of our feature topic Privacy.

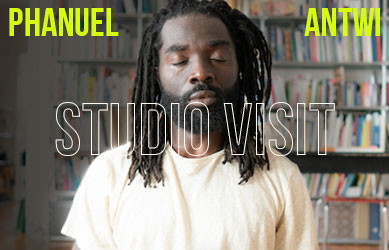

K Allado-McDowell is a writer, speaker, musician and the first writer-in-residence at Berlin art institution Gropius Bau. They established the Artists + Machine Intelligence program at Google AI and are at the forefront of working with AI as a creative tool. Allado-McDowell has authored a number of books in collaboration with GPT-3, including this year’s ‘Air Age Blueprint’—a story about a young filmmaker and a Peruvian healer that weaves “fiction, memoir, theory and travelogue into an animist cybernetics.”

We are just at the beginning of understanding the capabilities of AI as a tool for creative expression, and there are legitimate fears about privacy and data extraction, as well as an uneasiness about being confronted by systems that talk and behave like humans. In this interview, Allado-McDowell argues that, rather than fearing hypotheticals, we can harness AI to address the very real climate and extinction crisis, and that we already exist within complex ecologies of intelligence, both human and nonhuman.

K Allado-McDowell, portrait // Photo by Ian Byers-Gamber, courtesy of K Allado-McDowell

Lorna McDowell: You’re the first writer-in-residence at Gropius Bau. What does it mean for you, as an author and a musician, to be thinking and writing within an art institution?

K Allado-McDowell: Well, I’ve done almost all of my work within the context of art and I’ve found that context really provides a lot of freedom to be more experimental and to think with multiple senses and with multiple modes of cognition. I’ve found that art audiences respond to my work differently than other audiences: there’s an ability to entertain speculation and just work outside of literary conventions, which is really nice. Having said that, I am writing in a pretty straightforward way for Gropius Bau. Their audience is maybe a more mainstream one because they serve the public in a different way to those writing for an audience that is very familiar with AI.

I’ve been thinking about the social and psychological implications of AI mostly in terms of design, but with Gropius Bau I’m trying to write something that will help people understand some fundamental concepts around AI and I’m trying to communicate those very clearly. In the past, I wouldn’t say that my writing hasn’t been clear, but that I’ve used more experimental techniques, or maybe my previous writings are more easily contextualised within experimental literary histories. Now, I’m not using AI in the writing, whereas in the past I’ve written a lot with AI and that’s been a means of embodying and performing some of the concepts that I write about. In this case, I’m trying to slow it down and write something that the average museum visitor will be able to approach without needing to know a lot about the field of AI.

LM: What are your thoughts on data privacy and its potential impact on tools like ChatGPT in the future? Do you think privacy as a concept will evolve along with AI?

KAM: I’ve been thinking and working on concepts around privacy for a long time with AI and one of the things that’s really clear, when you start to use AI every day, is that in order for it to be a helpful tool, it needs to understand people and it needs to understand individuals. Right now, when you use a chatbot, the majority of them don’t keep a record of your interactions in the large context. When you and I interact, I start to remember the things that we’ve done together, the conversations we’ve had, and that sets up the context for the current conversation and the current interaction.

If we want AI tools to be more useful, they need to understand us better as individuals and how we live in community, and that we are part of larger networks. And to do that there needs to be some form of privacy, because that kind of information is private. In order for that to be the case we need to have different structures—so there are technical methods for creating the kind of privacy that enables models to learn at scale, while preserving individual privacy. Federated learning is one of those techniques, whereby statistics are used to protect identities and still learn from the broad set of human interactions. You mentioned ChatGPT as an example. There is nothing like that in ChatGPT and there is also no memory. I think we will see a need for memory, especially as things like AI assistants become more common. It’ll start to become obvious that privacy is really important for those use cases.

Now, one of the issues is that these models all run on servers. So when you interact with one, you’re sending data to a server that does the calculations, rather than running them on your local device. So we will see more system designs where AI is running locally and it can be encrypted. This will introduce the idea of a sort of zone of privacy around information, something that has been formulated and thought about for many years. The sudden popularity of ChatGPT has really changed the landscape. Before it was introduced, a lot of companies were hesitant to put models out there that would use such information. Competition has produced a situation where companies are willing to take more of a risk. Now, if you put personal identifying information into a conversation with a chatbot, that may end up in a training set. Despite what companies say, there is no way to ensure that the information is not legible to the system and isn’t being used as training data. So the idea of running AI locally or in a privacy-preserving way needs to be built into the infrastructure of the system.

K Allado-McDowell: ‘Air Age Blueprint,’ cover artwork by Somnath Bhatt, published by Ignota // © K Allado-McDowell and Ignota

LM: You’ve likened the creative process in your co-writing with AI to composing music. Some artists are concerned about “losing” their work to AI-generated media. What considerations do you think need to be made in the context of art making in collaboration with AI?

KAM: In interacting with AI, you’re in a collaborative conversation. I use the metaphor of music because to me, writing with AI, especially in my first book ‘Pharmako-AI,’ felt a lot like musical improvisation. So passing phrases back and forth, the AI responding to my input and me responding to its input, in the same way that musical improvisers can build a phrase or a melody. There are more subtle effects also, in the way the collaborators influence each other. As a musician, I may pick up a style or a lick, or a vibe, from another musician that would influence how I play in the future. Similar things are happening with AI and the influence is present.

One of the things for artists and anybody working with these systems to be aware of is the influence they have on their creativity and their voice. So we can certainly develop a voice with these tools, just like other tools shape our expressive creations. We need to maintain and discover the voices that are possible with these tools. A common perception or understanding of AI imagery is that it all looks the same or very similar. It’s kind of reproducing the most common aspects of a certain style, image, or the way an object is represented in photography, illustration or art. I think to be satisfied as an artist requires finding one’s own voice within these tools. And I think that we’re starting to see what that looks like. It is a new tool and people need to find new ways of expressing with it that feel unique to them. That can come through the context in which the work is presented, the narratives that are present in it, the concepts in it, the visual style.

Image-generating AI is very hard to control and requires that we develop a new language to work with it. It’s actually quite difficult compared to other media, where we already understand what it means to develop your own place through drawing, painting or sculpture. We’ve had thousands of years to understand what that means. With AI tools, it can be easy to think you found something really unique and interesting and then discover that other people have found that same thing. You have to ask, what is it about me as an artist that makes this meaningful? Or how does this feel specific to me? When we look at the work of an artist, like Jackson Pollock or Andy Warhol, you still see the specifics of their hand, their intention and their creative voice in those works. Warhol is a great example because he used pop iconography, screen-printing and mass media communication tools, and still was able to do something that felt distinct to him. I trust that artists will do that. But, you know, these tools are very recent, so I think over time we’ll start to see what generative AI really means as an artistic tool.

K Allado-McDowell: ‘Pharmako-AI,’ cover artwork by Refik Anadol, published by Ignota // © K Allado-McDowell and Ignota

LM: In your article ‘Designing Neural Media’—the first in your three-part series for Gropius Bau—you question how the influence of neural media (AI and its structures) might be used to reshape our selves and society toward the most beneficial ends. What are your thoughts on how we might best approach designing neural media capable of this?

KAM: There’s a lot of conversation happening right now about AI safety, the risks that AI poses. And one of the things I haven’t seen addressed very thoroughly is the subjective and psychological effect. There have been multiple stories in the news about early interactions with AI that have become something like canonical, for instance Kevin Roose and the other examples I give in the article, such as Blake Lemoine, who interacted with AI systems in a way that made him question whether the system was sentient or conscious and what needed to be done, and called into question core beliefs about what it means to be human. So when confronted with a system that behaves like a human, we can respond in multiple ways, but one of the common early responses is to ask the question: is this system that acts like a language-using human being conscious in the same way as we are? Another response among those who work with AI is to question what makes humans unique, and to ask if maybe we are not also similar to AI in how we function. I propose that these are reductive responses. You can see the AI as human-like or humans as AI-like, but I think a more nuanced reading is that there are networks of intelligence operating in a distributed fashion in every situation. We, ourselves, are not strictly just human.

I often see in our responses to AI a fascination with nonhuman intelligence. And whenever I uncover that, it strikes me as somewhat ironic given that we are always surrounded by nonhuman intelligence in plants and animals, the ecosystem. So, if we were to take a step back and look at the larger picture where human intelligence exists alongside nonhuman intelligence already through biology and ecology and through our interspecies relationships, might we think about AI that way? And, in doing so, would that reveal intelligence that is already present or reveal a way to address our alienation from nature and from each other?

AI illustration, 2023 // Courtesy of K Allado-McDowell

LM: On this note, one of your main research interests during the residency is how technology can enable interspecies futures. Can you elaborate on this?

KAM: To me, the idea of interspecies applications of AI will include training models to understand the expressions of nonhumans, like the work that is being done to understand cetacean expressions, of whales and dolphins. An area of research suggests that AI can be used to understand nonhumans and that AI can be a tool that brings our attention outside of strictly human concerns. We can use AI to understand nature better, to understand the patterns in nature better and our role in nature, to understand what we’re doing to the environment, or we can use it to amplify our own interests. [Better understanding nature] can happen through observation of weather patterns, observation of animal migration, all those forms of attention that allow us to see outside of ourselves.

Ultimately, we can ask: how can I be more productive? How can I get more attention on my social media accounts? How can I make the perfect viral video? Not that these things are bad necessarily, but if AI as a cognitive magnifier is pointed strictly at ourselves, we will only get more of ourselves. I think that, right now, that’s the limit of the conversation. There’s an almost hysterical conversation happening right now about what AI could do to us in the future and the idea that it might cause human extinction. To me, that stands in stark contrast to the existing extinction of nonhumans that is happening all over the planet. And I can’t help but wonder why we’re so focused on hypothetical future extinctions because of AI when we know that there are extinctions happening already. We’ve lost so much of the animal population in the last 50 years. To me, if there’s one thing that we should be using AI for, it should be in addressing the climate and extinction crisis. Because that really is our primary concern.

If we’re worried that AI is going to cause us to go extinct, we need to be looking through a relational lens at the role we play with other nonhuman species on Earth and AI can actually help with that. There’s an opportunity here, especially at this point in the development of AI, to look at what we’re not discussing and how AI can be useful there.